The AI Resource Handbook: A Blog Series - Introduction

Artificial intelligence is everywhere—and for good reasons. While it is not “intelligent” in the human sense, AI is a remarkable tool: a probability engine trained to detect patterns and predict the most likely next word, phrase, or response. Think of it as a supercharged autocomplete. When your phone suggests the next word in an email, it is not understanding you. It is drawing on millions of similar word combinations. AI works the same way, just on a much larger scale. For professionals integrating AI into their workflow, this distinction is empowering. AI will not replace your judgment or strategy, but it can manage repetitive tasks, sift through complex patterns, and generate options faster than any human could alone. It can be intimidating at first, and it may take some experimentation to get it right—but once you understand how to guide it, AI becomes a fast, flexible assistant that unlocks new levels of productivity and creativity.
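To make the autocomplete comparison concrete, here is a deliberately tiny sketch. It is not how production models are built: it simply counts which word follows which in a made-up corpus and suggests the most frequent follower. Real AI models learn probabilities over subword tokens with neural networks trained on vastly more text, but the core idea of predicting a likely continuation from observed patterns is the same. The function names and corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def suggest_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny, made-up "training corpus" of email phrases.
corpus = (
    "please find attached the report "
    "please find attached the invoice "
    "please let me know"
)
model = train_bigrams(corpus)
print(suggest_next(model, "please"))  # "find" follows "please" twice, "let" only once
```

The model has no idea what an invoice is; it only knows that "find" followed "please" more often than anything else did.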

Why AI Does Not “Understand” Practice

If you ask AI about building codes, zoning, or compliance rules, you will find that its responses often feel trustworthy simply because they sound polished and professional. Polish, however, is not the same as accuracy. Professionals in the field know that one code section can be interpreted differently across jurisdictions, or that a regulation’s impact depends on the client’s specific circumstances. AI does not know that. It is not weighing the granular details of your project; it is simply predicting the words most likely to follow your question. A human expert can tell an outdated code reference from a current one; AI cannot, and it might hand you both in the same response with equal confidence. Without your judgment layered on top, you are left with answers that sound reliable but may steer a project in the wrong direction.

The Liability Bias: Why AI Plays It Safe

AI’s responses often feel overly cautious, especially for practical tasks. Ask it how to change a light switch, and it will recommend contacting a licensed electrician, obtaining a permit, shutting off power, and even alerting the energy company. A skilled electrician, however, can often complete the job in minutes, sometimes without even turning off the power. Why the discrepancy? The caution comes from AI’s training data. Large organizations publish only the guidance they are willing to assume liability for, so instructions for potentially hazardous tasks rarely appear, even when those tasks are routine for professionals. As a result, AI will consistently advocate strict compliance, multiple permits, and licensed personnel. Experienced practitioners, by contrast, know when rules can be navigated, when permits can be expedited, and when certain requirements can be interpreted with judgment. AI lacks the nuanced, practical knowledge that comes from real-world experience.

The Deck Analogy

Let’s use the example of a contractor building a deck over living space (like a patio over a bedroom). This is common and accepted practice, with known assemblies recommended by architects and designers. Each assembly involves multiple parts: membranes, caulking, flashing, fasteners, etc. Here is the tricky part:

- The caulking manufacturer says: “Not compatible with X membrane system.”

- The membrane manufacturer says: “Not compatible with Y flashing product.”

- Each component supplier does the same.

Why? Because if the deck fails—often causing leaks and damage—no one wants to be held liable for the overall system. Each stands behind their piece only under perfect conditions. Even though professionals know how these decks are built, there is no single, liability-free document that guarantees a fully approved assembly. Every source protects itself.

How That Relates to AI

AI faces the same challenge. It compiles knowledge from countless sources, each with its own disclaimers, omissions, and blind spots shaped by liability. Just as the contractor cannot find a 100% liability-backed deck system, AI cannot find a 100% liability-backed version of truth. Its outputs are shaped by the caution and constraints of the original sources, making built-in flaws unavoidable. AI will always err on the side of caution, prioritizing “safe” answers over what might be practical in real-world applications. Understanding this dynamic is essential for professionals who want to use AI effectively. It is a tool for guidance and efficiency, not a replacement for human judgment.

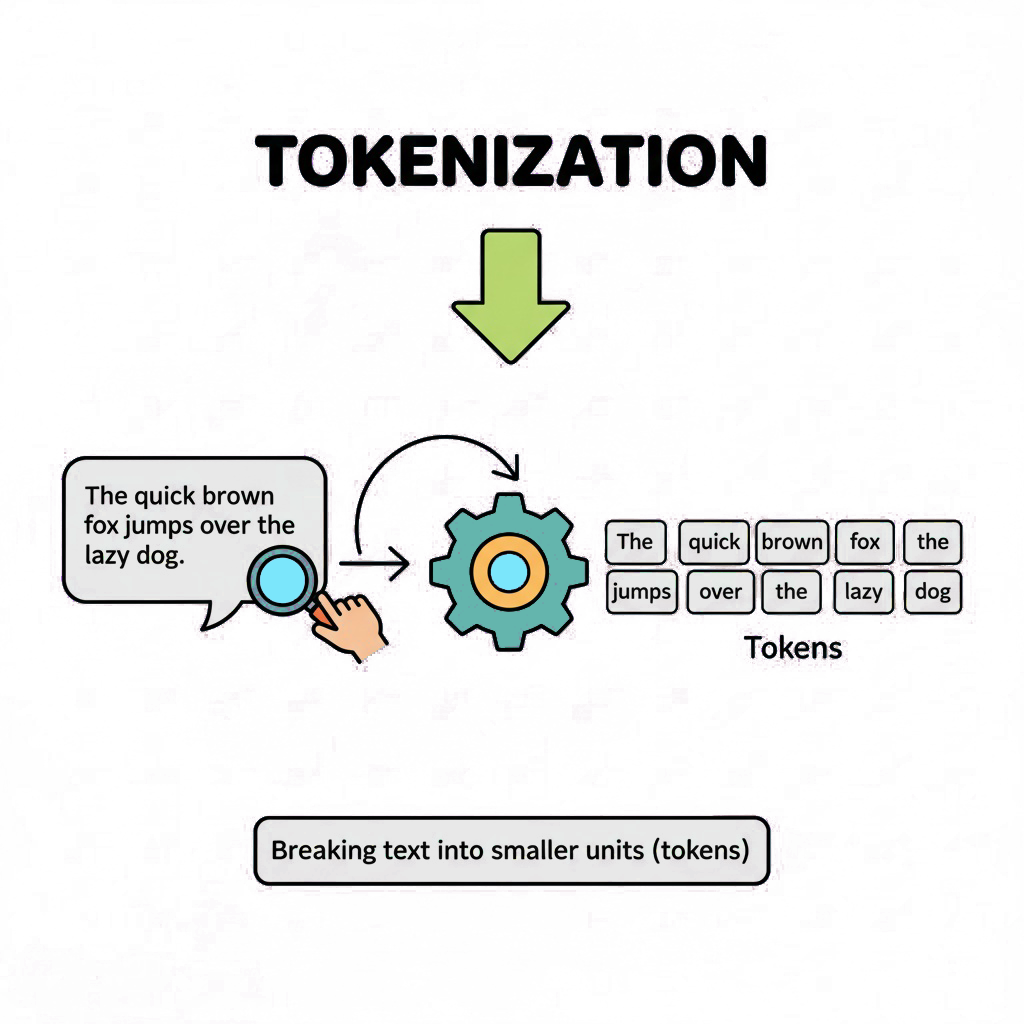

What is Tokenization?

Tokenization is the process of breaking text into smaller, machine-readable units called tokens. Depending on the system, tokens might be whole words, individual characters, or subword fragments. AI models don’t “see” text as humans do; they work with sequences of these tokens, each mapped to a number. This matters because how text is split into tokens directly affects how rules and patterns are interpreted.
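The difference between word-level and character-level tokens can be shown with a short sketch using Python's built-in `re` module. The sentence and the tiny vocabulary built from it are hypothetical; real tokenizers ship with a fixed vocabulary of tens of thousands of entries, usually made of subword pieces.

```python
import re

text = "AI models read tokens."

# Word-level: runs of word characters, with punctuation as its own token.
word_tokens = re.findall(r"\w+|[^\w\s]", text)

# Character-level: every non-space character becomes its own token.
char_tokens = [ch for ch in text if not ch.isspace()]

# The model never sees the strings themselves, only integer IDs.
# Here the "vocabulary" is built from this one sentence, purely for illustration.
vocab = {tok: i for i, tok in enumerate(sorted(set(word_tokens)))}
token_ids = [vocab[tok] for tok in word_tokens]

print(word_tokens)
print(len(char_tokens), "character tokens")
print(token_ids)
```

The same sentence becomes 5 word tokens or 19 character tokens, and in both cases the model works with the numbers, not the letters.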

Sub-Word Tokenization

A subtle example is how a word like “artificial” might be tokenized. Instead of being treated as a single whole word, it could be split into subword pieces such as "art" + "ificial", or even "arti" + "fic" + "ial", depending on the tokenizer. This fragmentation means that instructions referring to “artificial” as a unit could misfire if the model treats only part of the word as relevant. To avoid this problem when writing rules for AI to follow, reduce ambiguity: keep wording consistent, avoid relying on assumed “whole word” matches, and, where possible, phrase instructions in ways that anticipate variations in how the model might split the text.
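Why the same word can split differently is easiest to see with a simplified subword tokenizer. The sketch below uses greedy longest-match splitting, loosely in the spirit of WordPiece-style tokenizers; the two vocabularies are hypothetical, whereas real tokenizers learn theirs from large amounts of text.

```python
def subword_tokenize(word, vocab):
    """Greedy longest-match-first split of `word` into pieces found in `vocab`."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                pieces.append(piece)
                start = end
                break
        else:
            # Nothing in the vocabulary matched: fall back to one character.
            pieces.append(word[start])
            start += 1
    return pieces


vocab_a = {"art", "ificial"}       # hypothetical vocabulary A
vocab_b = {"arti", "fic", "ial"}   # hypothetical vocabulary B

print(subword_tokenize("artificial", vocab_a))
print(subword_tokenize("artificial", vocab_b))
```

With vocabulary A the word becomes two pieces, and with vocabulary B it becomes three. An instruction about "artificial" reaches the model as whichever pieces its own vocabulary produces.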

A Tokenization Problem

Take a look at the folder-navigation scenario in the “BAD Tokens” sidebar. Its rules introduce overlapping interpretations of the same character (-). The AI has to tokenize the string "josh-09 - laura-34", but each rule suggests a different way to treat the tokens:

- Rule 1 (name-based navigation) sees "josh" as the token.

- Rule 2 (ignore trailing numbers after a dash) sees "josh-09" and tries to reduce it to "josh".

- Rule 3 (subfolders indicated by " - ") sees " - " as a split marker between folders.

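When tokenized, "josh-09 - laura-34" might become a sequence like ["josh", "-", "09", "-", "laura", "-", "34"], depending on the tokenizer. Notice that in this sequence the spaces around the middle dash have disappeared, so the separator looks exactly like the other two dashes.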
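To see why the middle dash loses its special meaning, here is a hypothetical tokenizer written with Python's `re` module that, like the sequence above, keeps letter runs, digit runs, and dashes but discards spaces. Many production tokenizers do keep some record of spacing, so treat this as an illustration of the ambiguity rather than of any particular model.

```python
import re


def naive_tokenize(text):
    """Split into letter runs, digit runs, and dashes; whitespace is discarded."""
    return re.findall(r"[A-Za-z]+|\d+|-", text)


tokens = naive_tokenize("josh-09 - laura-34")
print(tokens)  # ['josh', '-', '09', '-', 'laura', '-', '34']

# Positions of every dash token: the separator (index 3) looks
# identical to the two "trailing number" dashes (indexes 1 and 5).
dash_positions = [i for i, tok in enumerate(tokens) if tok == "-"]
print(dash_positions)  # [1, 3, 5]
```

Once the spaces are gone, nothing in the token list itself tells rule 2's dashes apart from rule 3's separator.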

The conflict arises because:

- The -09 wants to be discarded (rule 2).

- The " - " wants to split into subfolders (rule 3).

- The -34 also wants to be discarded (rule 2 again).

The AI can’t perfectly reconcile these overlapping instructions because the same dash token (-) plays different roles in different contexts. Read literally, rule 2 ("ignore anything after a minus sign") would even discard everything after the first dash, subfolder included. This is a tokenization ambiguity: the system may follow the “most probable” path it learned from training data, leading to unreliable or incorrect folder resolution.

BAD Tokens

Imagine you are setting up a project file system and ask AI to follow these rules for navigating folders:

1. Name-based navigation: Go to the folder that matches the name (e.g., "josh" → "josh" folder).

2. Ignore trailing numbers after a dash: Ignore anything after a minus sign (e.g., "josh-95" → still go to "josh" folder).

3. Subfolders indicated by “ - ”: Treat " - " (space-dash-space) as a subfolder separator (e.g., "josh - laura" → go to "laura" subfolder inside "josh").
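These three rules become unambiguous once their order is fixed. The sketch below is a hypothetical resolver written in Python, not something an AI model runs internally. Applied literally, rule 2 throws away everything after the first dash, including the subfolder; applying rule 3 before rule 2 gives every dash exactly one role.

```python
import re


def resolve_literal(path):
    """Rule 2 taken literally: ignore anything after the first dash."""
    return [path.split("-", 1)[0].strip()]


def resolve_ordered(path):
    """Apply the rules in a fixed order so each dash has exactly one role.

    1. Split on " - " (space-dash-space) into folder levels (rule 3).
    2. In each level, strip a trailing "-<digits>" suffix (rule 2).
    3. What remains is the folder name to navigate to (rule 1).
    """
    levels = path.split(" - ")
    return [re.sub(r"-\d+$", "", level.strip()) for level in levels]


print(resolve_literal("josh-09 - laura-34"))  # ['josh']
print(resolve_ordered("josh-09 - laura-34"))  # ['josh', 'laura']
print(resolve_ordered("josh-95"))             # ['josh']
```

Stating the same precedence in a prompt (for example, "first split on space-dash-space, then drop trailing numbers") is one way to remove the guesswork the AI would otherwise resolve by probability.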

Working With Probabilities: How to Get Better Results from AI

AI is not a colleague. It cannot think alongside you; it generates responses based on statistical likelihoods. The key to working with it effectively is learning to structure prompts that steer those probabilities toward useful outcomes. Think of AI less like a conversation partner and more like a search engine: the clearer and more specific you are, the better the results. Here are some practical strategies you can adopt when talking to AI:

- Be explicit. Do not ask vague, open-ended questions like: “What else should I consider?” Instead, provide context: “Besides load-bearing changes and roof structure, what else should I consider in a remodel?”

- Reset often. If AI starts repeating itself, begin a new session and supply full context before expanding upon your original question.

- Cross-check. Compare outputs across multiple AI tools or against your own expertise.

- Expect disclaimers. Caution-heavy language often reflects the liability bias in training data and is not necessarily the best professional guidance.

- Set constraints. Specify the format, audience, or region to make responses more relevant and actionable.

By understanding that AI is fundamentally a probability engine, and by guiding it carefully with structured prompts, you can leverage its strengths while avoiding common pitfalls.
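The "be explicit" and "set constraints" strategies amount to giving every prompt the same predictable structure. Here is a minimal sketch, assuming you assemble prompts in Python before pasting them into a chat tool or sending them to an API; the `build_prompt` helper and all example values are hypothetical.

```python
def build_prompt(question, context, constraints):
    """Assemble a structured prompt: context first, then the question, then constraints."""
    lines = ["Context:"]
    lines += [f"- {item}" for item in context]
    lines += ["", f"Question: {question}", "", "Constraints:"]
    lines += [f"- {key}: {value}" for key, value in constraints.items()]
    return "\n".join(lines)


prompt = build_prompt(
    question=(
        "Besides load-bearing changes and roof structure, "
        "what else should I consider in a remodel?"
    ),
    context=["Single-family home built in the 1960s", "Kitchen and bath remodel"],
    constraints={
        "Format": "bulleted checklist",
        "Audience": "general contractor",
        "Region": "California, USA",
    },
)
print(prompt)
```

Keeping context, question, and constraints in fixed sections also makes it easy to supply full context again when you reset a session.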

Where AI Helps and Where It Does Not

AI excels at tasks that involve processing large amounts of information quickly. It can help expedite work such as:

- Summarizing research and concepts

- Drafting reports, proposals, and specifications

- Providing preliminary cost or schedule estimates

But it also has clear limitations:

- Design generation – outputs are often clunky or impractical.

- Code compliance – jurisdiction-specific details can be unreliable.

- Complex problem-solving – requires local knowledge or real-world experience.

Simplest Form

For those unfamiliar with advanced mathematics, the inner workings of large language models (LLMs) often remain a "black box." Thankfully, Grant Sanderson, the brilliant mind behind the popular YouTube channel 3Blue1Brown, is a master of demystifying complex topics with stunning animated visuals. His comprehensive video on the probability math behind LLMs offers an accessible, intuitive breakdown, revealing how a seemingly random process is governed by elegant mathematical principles.
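The core probability step can be sketched in a few lines. At each step an LLM assigns a raw score (a "logit") to every token in its vocabulary; the softmax function turns those scores into probabilities, and the next token is sampled from them. A temperature setting, which many AI APIs expose, controls how peaked that distribution is. The candidate words and scores below are hypothetical, and a real model scores tens of thousands of tokens rather than three; this is the general idea only, and the video goes much deeper.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(words, logits, temperature=1.0, rng=random):
    """Pick the next word at random, weighted by its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Hypothetical scores for candidate words after "Please find attached the ..."
words = ["report", "invoice", "banana"]
logits = [2.0, 1.5, -3.0]

for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax(logits, t)])

print(sample_next(words, logits, rng=random.Random(0)))
```

At low temperature "report" dominates; at high temperature the choices even out. That weighted coin flip is why the same prompt can produce different answers on different runs.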

No matter how advanced it becomes, AI cannot replicate the human elements of professional practice. It cannot sense a client’s stress, mediate between competing priorities, or make the creative leaps that define great work. Where it shines is in support. Think of AI as a personal assistant: it drafts, compiles, and generates options, freeing you to focus on judgment, strategy, and creativity.

Getting Started

If you are new to using AI at work, start small and experiment:

- Compare AI’s research summaries with your own knowledge.

- Draft a proposal using AI, then refine it with your professional judgment and voice.

- Ask AI for multiple strategies on a compliance challenge, then evaluate them.

- Generate project documentation, then check it against your industry's standards.

Through these exercises, you will quickly see where AI adds value and where it falls short.

The Human Element

What AI Cannot Replace

Despite its capabilities, AI cannot replicate the human elements that define professional practice. It cannot sense client emotions, navigate complex social dynamics, or make the creative leaps that distinguish exceptional work. AI can generate multiple options, draft specifications, or provide general compliance guidance, but it cannot judge which solution truly aligns with a client’s vision, negotiate with officials, or creatively resolve regulatory challenges. It may suggest approaches, but it cannot evaluate the subtle relationships between materials, methods, and project goals. In this evolving professional landscape, your role shifts from doing everything yourself to orchestrating AI tools to achieve better results. Think of yourself as the conductor of an orchestra: each AI tool contributes its strengths while your judgment, creativity, and experience shape the final outcome.

Looking Ahead: Professionals with AI

The future of professional practice is not AI replacing professionals. Rather, it is professionals using AI to work faster, smarter, and more creatively. Just as past technologies did not eliminate the need for skilled practitioners but transformed how they work, AI enhances practice without replacing professional judgment. Professionals who embrace AI will work more efficiently, serve clients better, and focus on the creative and strategic aspects of their work that truly define their profession. The key is understanding how AI works, recognizing its limitations, and leveraging it as a tool to augment your expertise.

Ready to start your AI journey? Begin with one simple experiment and build from there. Your professional experience combined with AI’s capabilities can create a powerful partnership that elevates your practice and delivers better outcomes for your clients.

Elizabeth Sabogal is a UC Berkeley graduate with a degree in rhetoric, so yes, she can absolutely argue with you about the correct use of a semicolon, and she’ll probably win. By day, she manages the bar at a prestigious Afghan restaurant in downtown Oakland (ask her about the cocktail list, but be prepared for a mini-lecture on pomegranate reduction). By night, she freelances as a writer with experience spanning contract drafting, exposés, grant proposals, and the occasional snarky blog post.

When she’s not wrangling words, Elizabeth is wrangling her two cats, Bo and Ollie, who firmly believe they are the true authors of her work. In her downtime, she paints rocks—because paper is too mainstream—and reads books from the comfort of her hammock chair.

Now, she’s diving into the world of AI. Her foundation may be fresh, but she’s documenting the journey with the same curiosity, wit, and occasional side-eye she brings to everything else. If you’ve ever wondered what happens when a rhetorician, two cats, and a chatbot walk into a bar… this blog might be your answer.

Boom now let me pump up the #SEO by dropping some #AIBasics and #Tokenization tips so you can see how #ArtificialIntelligence really works in #ProfessionalPractice — no fluff, just #PromptEngineering power with a side of #AIHumor from #RabbittDesign and #ElizabethCragg. Because understanding #SubwordTokenization and #LLMs isn’t just for techies — it’s the key to #AIProductivity and smarter #WorkflowAutomation. So grab a seat (or a #HammockChair if you’re fancy), call over #BoAndOllie the cats, and let’s turn #AIExplained into something you’ll actually use in your #CreativeWork and #BusinessStrategy.

You can put me to work: ecragg@berkeley.edu